Safety Validation

A major challenge to deploying autonomy in safety critical domains is the need for extensive validation and testing. Even if a system is thought to be extremely effective and safe, validation is required to be sure there aren’t any unforseen flaws in the system. The two major approach to safety validation are formal methods, where mathematical proofs of safety are sought, and approximate (or black-box) methods, where sampling is used to undover for failures of the system. While formal approaches lead to the most confident statements regarding safety, they often require significant assumptions and do not scale to very complex systems. Black-box methods can be applied to very complex systems but rarely permit formal statements of safety. Often, a combination of formal and approximate approaches are used to build a strong safety case.

Black-box Safety Validation

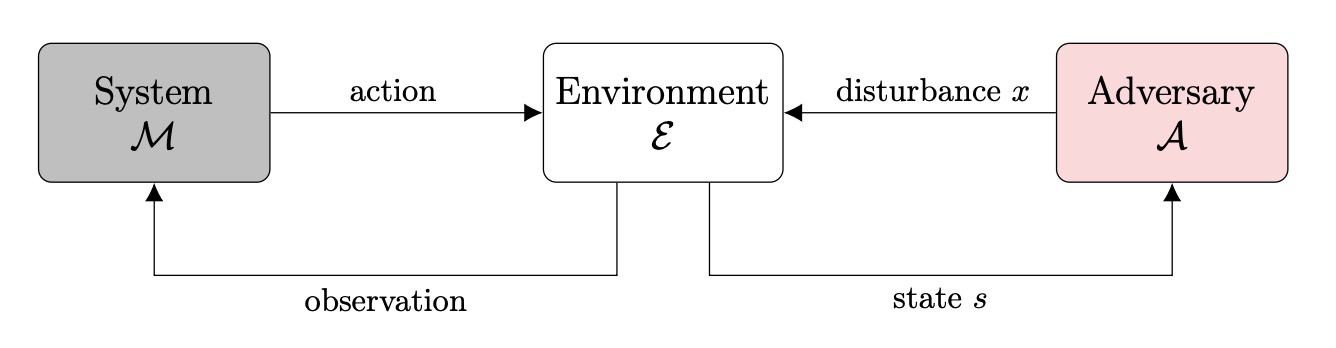

There are many approaches to approximate safety validation. We catalogue many of them in a survey paper Algorithms for Black-Box Safety Validation. First we show that black-box safety validation can be formulated as a Markov decision process where an adversary controls disturbances in an environment where the system under test is trying to perform a task. The dynamics of the envrionemnt and the system-under-test are combined in this formulation.

Then we identify three different common goals that safety validation algorithms achieve:

- Falsification: Where algorithms search for any failure mode of the system.

- Most-Likely Failure Analysis: Where algorithms search for the most likely failure according to some probabilisitc model of the environment.

- Failure Probability Estimation: Where algorithms estimate the probability of a failure event occurring.

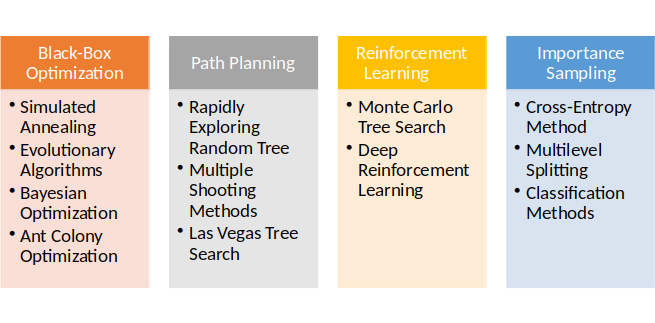

Lastly, we categorize the various algorithms into four categories and explore specific algorithms within each.

Black box approaches have been applied to find failures across a wide variety of domains. Below are some examples of failures of an autonomous vehicle in the CARLA simulation environment:

|  |

|  |

Formal Verification of Image-Based Controllers using Generative Models

Recently, it has become possible formally analyze the input output properties of deep neural networks (see survey here). This innovation has opened the door to formal verification of machine-learning based control systems. A major challenge, however, is how to represent the properities we wish to verify, especially when complex inputs (such as images) are involved.

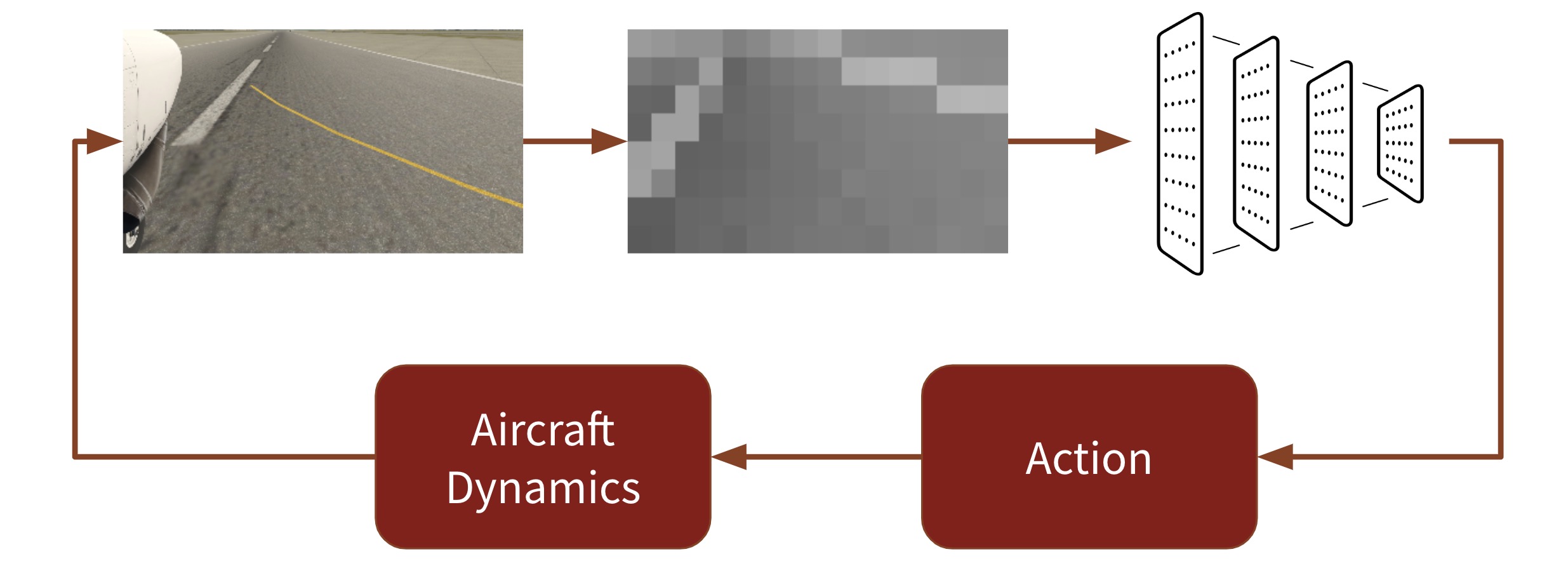

In our recent paper we take first steps toward formally verifying an image-based neural network controller for autonomous aircraft taxiing. The system is depicted below. There is a camera on the wing of a taxiing aircraft taking pictures of the runway. The images are downsampled and processed by a small neural network to produce a control action to keep the aircraft near the center line.

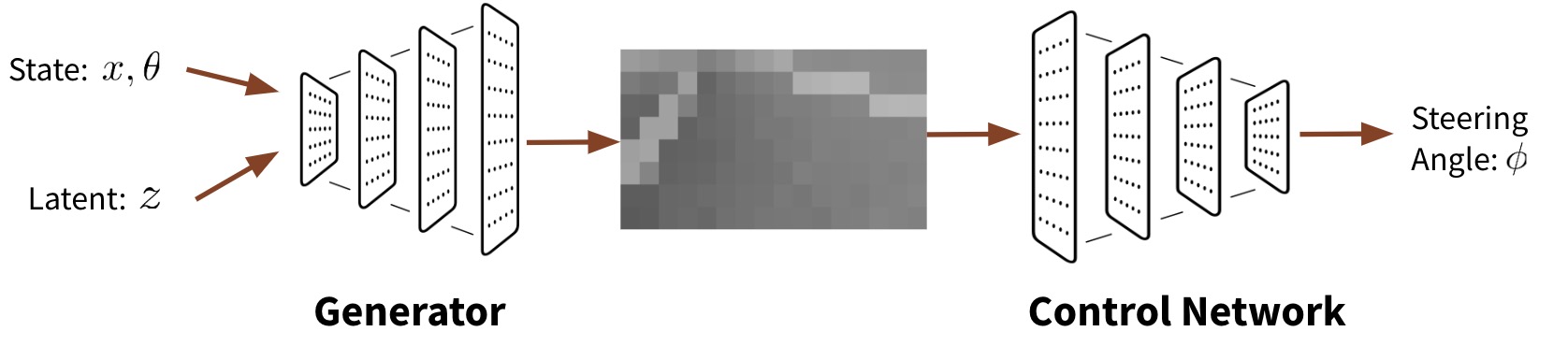

Our key insight for verifying the safe operation of this controller is depecticted below. We want to create a generative model that maps positions on the runway to plausible images experienced by the agent. We then concatenate this model with the controller and ask questions such as “for all possible positions on the runway and plausible images generated by our generative model, will the neural network controller keep the aircraft near the center line?”. We trained a conditional generative adversarial network with images produced from the Xplane 11 aircraft simulator. The gif (left) shows how well the generative model can match the real images experienced by the controller.

Our key insight for verifying the safe operation of this controller is depecticted below. We want to create a generative model that maps positions on the runway to plausible images experienced by the agent. We then concatenate this model with the controller and ask questions such as “for all possible positions on the runway and plausible images generated by our generative model, will the neural network controller keep the aircraft near the center line?”. We trained a conditional generative adversarial network with images produced from the Xplane 11 aircraft simulator. The gif (left) shows how well the generative model can match the real images experienced by the controller.

Using this approach we are able to find the forward reachable set (below) of the aircraft and find that indeed the controller is safe under the distribution of images represented by the generative model.

Ongoing Research

Our ongoing research in safety of autonomous systems includes

- Developing algorithms for model-based validation, where the environment and system under test are both differentiable.

- Developing algorithms for estimating the probability of failure of sequential systems

- Improving the scalability of formal analysis methods